OpenAI Timeline

This timeline features key events in the history of OpenAI. It is a “living document”: we keep expanding it over time as new events are added.

“OpenAI is an American artificial intelligence research laboratory consisting of the non-profit OpenAI Incorporated and its for-profit subsidiary corporation OpenAI Limited Partnership. OpenAI conducts AI research with the declared intention of promoting and developing a friendly AI.”

2015-12-10 … OpenAI is founded in San Francisco by Sam Altman, Reid Hoffman, Jessica Livingston, Elon Musk, Ilya Sutskever, Peter Thiel, and others.

2018 … GPT is introduced. GPT (i.e. GPT-1) is a large language model (LLM) with 117 million parameters, pre-trained on the BooksCorpus dataset of about 7,000 unpublished books.

Read the paper “Improving Language Understanding by Generative Pre-Training” (by Alec Radford, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever).

“Natural language understanding comprises a wide range of diverse tasks such as textual entailment, question answering, semantic similarity assessment, and document classification. Although large unlabeled text corpora are abundant, labeled data for learning these specific tasks is scarce, making it challenging for discriminatively trained models to perform adequately. We demonstrate that large gains on these tasks can be realized by generative pre-training of a language model on a diverse corpus of unlabeled text, followed by discriminative fine-tuning on each specific task. …”

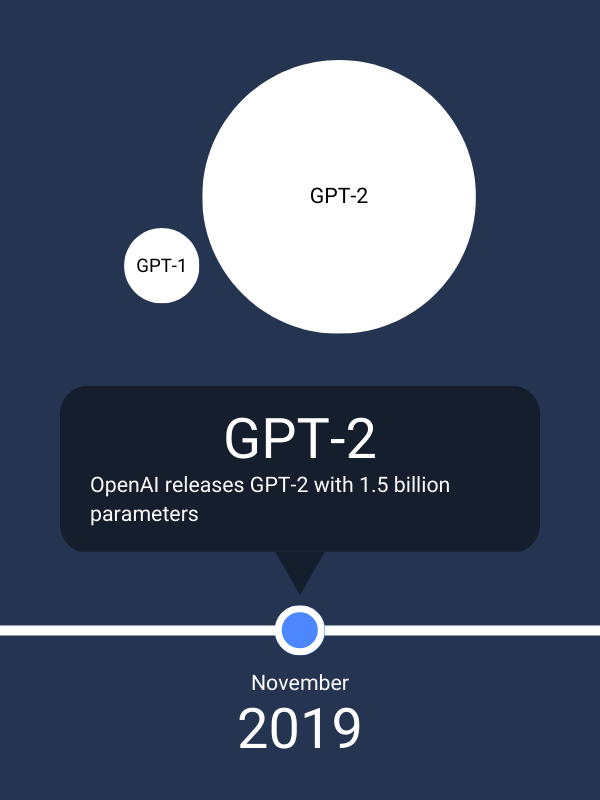

2019 November … GPT-2, a more powerful successor to GPT-1, is released in full. First announced in February 2019 and released in stages, it is also an LLM, has about 1.5 billion parameters, and was trained on a much larger dataset: roughly 40 GB of text from about 8 million web pages.

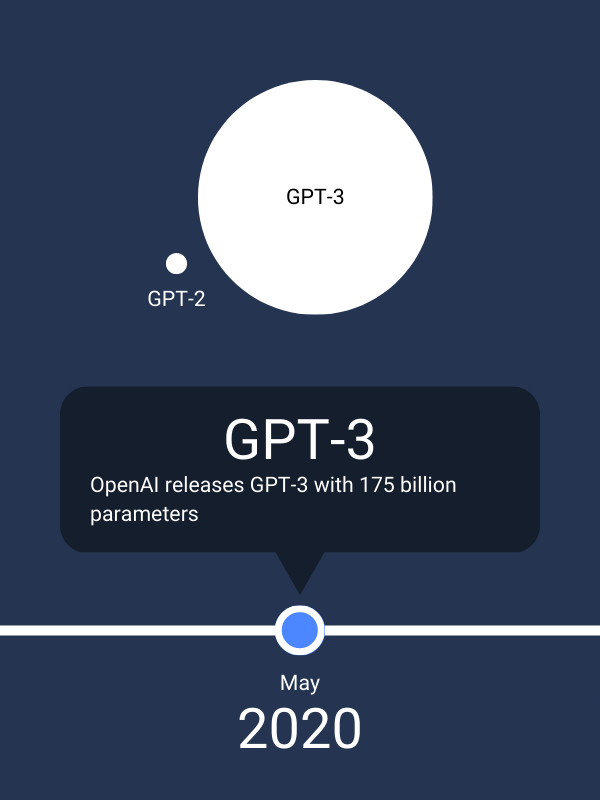

2020 May … GPT-3 is released. It is significantly larger and more capable than GPT-2, with 175 billion parameters, and was trained largely on about 570 GB of filtered Common Crawl text plus several smaller corpora.

2021 … Codex is released. It is a GPT-3 descendant fine-tuned on code; the models described in OpenAI's Codex paper have up to 12 billion parameters and were trained on 159 GB of Python code from 54 million public GitHub repositories. Codex powers GitHub Copilot, a code-generation and code-completion tool.

2022-09-21 … OpenAI open-sources Whisper, a neural net that approaches human-level robustness and accuracy in English speech recognition.

Find out more about Whisper at https://openai.com/research/whisper.

Try OpenAI’s Whisper model for speech recognition in an audio-file transcription Space we developed on Hugging Face 🤗.

Transcribe YouTube videos with Whisper in another Space we developed on Hugging Face 🤗 (all you need is the YouTube URL).

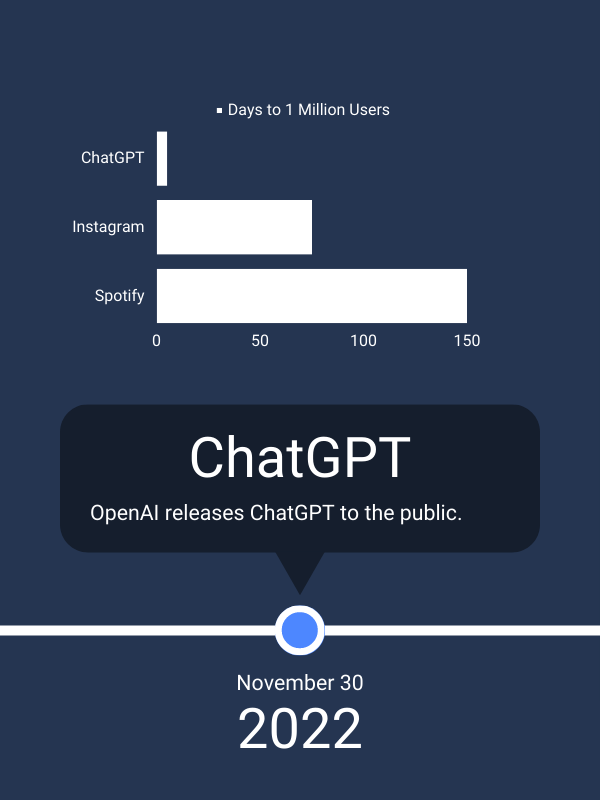

2022-11-30 … ChatGPT is released. It is built on a model from the GPT-3.5 series, an updated and enhanced successor to GPT-3, fine-tuned for dialogue using reinforcement learning from human feedback (RLHF). The amount of data used to train ChatGPT has not been disclosed.

ChatGPT surprised many people with how well it works.

For a detailed list of OpenAI’s published research, please visit https://openai.com/research.